A Law Enforcement A.I. Is No More or Less Biased Than People

New research compares the COMPAS algorithm with humans.

Posted January 17, 2018

Some people champion artificial intelligence as a solution to the kinds of biases that humans fall prey to. Even simple statistical tools can outperform people at tasks in business, medicine, academia, and crime reduction. Others chide AI for systematizing bias, which it can do even when bias is not programmed in. In 2016, ProPublica released a much-cited report arguing that a common algorithm for predicting criminal risk showed racial bias. Now a new research paper reveals that, at least in the case of the algorithm covered by ProPublica, neither side has much to get worked up about. The algorithm was no more or less accurate or fair than people. What’s more, the paper shows that in some cases the need for advanced AI may be overhyped.

Since 2000, a proprietary algorithm called COMPAS has been used to predict criminal recidivism—to guess whether a criminal will be arrested for another crime. The ProPublica reporters looked at a sample of criminals from Florida. They found that among those who did not recidivate, blacks were nearly twice as likely as whites to have been mistakenly labeled by COMPAS as high-risk. And among those who did recidivate, whites were nearly twice as likely as blacks to be mistakenly labeled as low-risk. People were angered.

It’s since been reported that the algorithm may not be as unjust as first thought. Blacks and whites who received the same risk scores were equally likely to go on to reoffend, so there was no racial discrepancy there. Whether the algorithm is fair depends on how you measure fairness, and it turns out that when two groups reoffend at different rates, it’s mathematically impossible for an imperfect algorithm to satisfy all of the common criteria at once. In any case, the authors of the new study, published today in Science Advances, wanted to know whether, for a given measure of performance, COMPAS was any worse than people.
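To see why the fairness criteria collide, here is a minimal sketch in Python. It relies only on the standard confusion-matrix identity linking a group's false positive rate to its base rate of reoffending, the score's positive predictive value (how often a "high-risk" label turns out to be right), and its false negative rate. The numbers and the names `group A` and `group B` are hypothetical and are not taken from ProPublica or the study.

```python
def false_positive_rate(base_rate, ppv, fnr):
    """False positive rate implied by the confusion-matrix definitions.

    base_rate: fraction of the group that reoffends
    ppv: fraction of "high-risk" labels that are correct
    fnr: fraction of reoffenders labeled "low-risk"

    Identity: FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR)
    """
    return base_rate / (1 - base_rate) * (1 - ppv) / ppv * (1 - fnr)


# Hypothetical values: the score is equally trustworthy for both groups
# (same PPV) and misses reoffenders equally often (same FNR).
PPV = 0.6
FNR = 0.35

# Hypothetical base rates: the only thing that differs between the groups.
for name, base_rate in [("group A", 0.5), ("group B", 0.4)]:
    fpr = false_positive_rate(base_rate, PPV, FNR)
    print(f"{name}: base rate {base_rate:.2f}, false positive rate {fpr:.3f}")
```

With the label equally reliable in both groups, the group with the higher base rate necessarily ends up with the higher false positive rate. Equalizing the false positive rates instead would require giving up equal reliability of the label or equal false negative rates.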

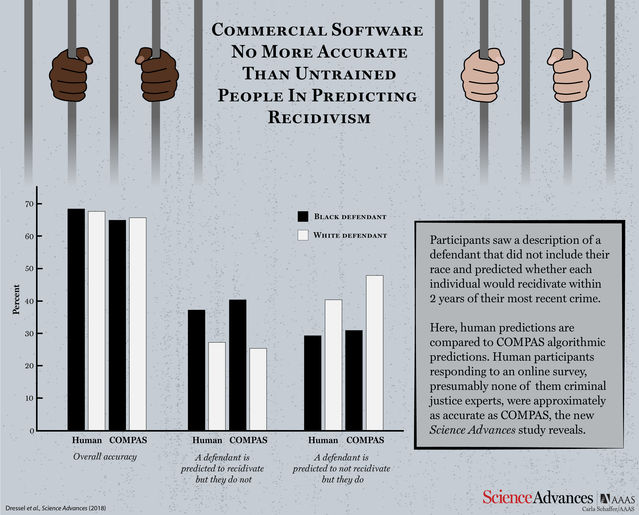

The researchers used as their main measure of accuracy the percentage of correct predictions of whether someone would recidivate or not. A wide selection of Internet users read brief descriptions of criminals that listed seven pieces of information, covering age, sex, and criminal record. The researchers also used COMPAS software to make predictions about these criminals. The algorithm inside COMPAS is unknown, but it has access to 137 pieces of data on each criminal. People and COMPAS were about equally accurate—around 65 percent. (They were about equal on more sophisticated measures of accuracy, too.)

People and COMPAS showed similar bias against blacks in the way that ProPublica pointed out—non-recidivating blacks received higher risk scores than non-recidivating whites, and recidivating whites received lower risk scores than recidivating blacks. But in terms of pure accuracy, neither people nor COMPAS showed racial bias—each was right about 65 percent of the time for both blacks and whites.
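Equal accuracy and unequal error types can coexist, which a small worked example may make clearer. The sketch below computes accuracy, false positive rate, and false negative rate from confusion-matrix counts. The counts are invented for illustration (100 hypothetical defendants per group) and are not the study's data.

```python
def rates(tp, fp, tn, fn):
    """Summarize a confusion matrix.

    tp: reoffenders labeled high-risk      fn: reoffenders labeled low-risk
    fp: non-reoffenders labeled high-risk  tn: non-reoffenders labeled low-risk
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_positive_rate": fp / (fp + tn),
        "false_negative_rate": fn / (fn + tp),
    }


# Hypothetical counts, 100 people per group. Both groups have 65 correct calls.
group_a = rates(tp=37, fp=20, tn=28, fn=15)
group_b = rates(tp=25, fp=12, tn=40, fn=23)

for name, result in [("group A", group_a), ("group B", group_b)]:
    summary = ", ".join(f"{key} {value:.2f}" for key, value in result.items())
    print(f"{name}: {summary}")
```

In this made-up case both groups are judged correctly 65 percent of the time, yet group A's mistakes lean toward false alarms and group B's toward missed reoffenders, which is the pattern described above.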

Aside from the similar accuracy and fairness of humans and COMPAS, two other surprising findings came out of the study. First, when a second group of people predicted criminal risk, this time with race included in the description (it wasn’t included for the first participants, or in the data COMPAS used), they were no more biased than when they didn’t see race.

Second, to match COMPAS, you don’t need 137—or even seven—pieces of data, or a fancy brain or algorithm. In one test, the researchers used one of the simplest machine learning algorithms (logistic regression), and only two data points per person (age and total number of previous convictions), and again their accuracy was about 65 percent. They tried a more powerful algorithm (a nonlinear support vector machine) with seven pieces of data, and it did no better. The findings emphasize one point that I’ve heard several artificial intelligence researchers make: “deep learning” (a form of AI using complicated artificial neural networks) may be hot right now, but it’s not always necessary. When in doubt, try the simplest formula with the smallest amount of data.
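For a sense of how small such a model is, here is a rough sketch of a two-feature logistic regression in plain Python. It is not the researchers' code: the data are synthetic, the relationship used to generate them is an assumption made up for this example, and names such as `make_synthetic_data`, `scale`, and `train` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_synthetic_data(n, seed):
    """Generate (age, prior_convictions, rearrested) rows from an assumed rule."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        age = rng.randint(18, 70)
        priors = rng.randint(0, 15)
        # Assumption for illustration only: risk rises with priors, falls with age.
        p = sigmoid(0.25 * (priors - 7) - 0.05 * (age - 40))
        rows.append((age, priors, 1 if rng.random() < p else 0))
    return rows


def scale(age, priors):
    """Roughly center and scale the two features so gradient descent behaves."""
    return (age - 40) / 15.0, (priors - 7) / 5.0


def train(rows, epochs=300, lr=0.5):
    """Fit weights for age, priors, and a bias term by batch gradient descent."""
    w_age = w_priors = bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        g_age = g_priors = g_bias = 0.0
        for age, priors, label in rows:
            x_age, x_priors = scale(age, priors)
            err = sigmoid(w_age * x_age + w_priors * x_priors + bias) - label
            g_age += err * x_age
            g_priors += err * x_priors
            g_bias += err
        w_age -= lr * g_age / n
        w_priors -= lr * g_priors / n
        bias -= lr * g_bias / n
    return w_age, w_priors, bias


def predict(model, age, priors):
    """Return True if the model puts the rearrest probability at 50% or more."""
    w_age, w_priors, bias = model
    x_age, x_priors = scale(age, priors)
    return sigmoid(w_age * x_age + w_priors * x_priors + bias) >= 0.5


train_rows = make_synthetic_data(1000, seed=0)
test_rows = make_synthetic_data(500, seed=1)
model = train(train_rows)
correct = sum(predict(model, age, priors) == bool(label)
              for age, priors, label in test_rows)
accuracy = correct / len(test_rows)
print(f"weights (age, priors, bias): {model}")
print(f"held-out accuracy: {accuracy:.2f}")
```

The whole model is three numbers: a weight for age, a weight for prior convictions, and an offset. The accuracy printed here reflects only the synthetic data and says nothing about real defendants.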

It should be noted, as the authors do, that predicting social outcomes is not as scientific as it’s sometimes made out to be. These algorithms weren’t predicting whether someone would actually commit another crime, but whether someone would be arrested again, and blacks are often arrested more frequently than whites for the same type of crime. So when algorithms (or people) learn patterns from outcomes that are subject to human bias against blacks, they can easily over-predict criminality for blacks, prompting increased law enforcement in black neighborhoods and perpetuating an unfair cycle.

Hany Farid, a computer scientist at Dartmouth who conducted the study with his (now former) student Julia Dressel, says he hopes the findings will inform courts about how much weight to place on algorithms. For example, a judge might second-guess a purportedly sophisticated system when informed that it’s no better than an Internet poll. (Or, I’d add, the judge might put more trust in Internet polls.) “We believe that there should be more transparency in how these algorithms work,” Farid says.

There are cases when AI will be fairer and more accurate than humans, cases when the reverse is true, and cases like this where it’s a wash. We need to keep doing studies similar to this one in order to check any assumptions about what computers can and cannot do.